Leading social media platform previously found to be the worst for users’ mental health has spoken about its new Wellbeing Team

In 2017, The Royal Society for Public Health (RSPH) called Instagram the worst social media network for mental health and wellbeing.

Further research from Carmen Papaluca of Notre Dame earlier this year revealed worrying connections between Instagram usage and negative mental health amongst younger, female users. Teens and young women in their early 20s were found to have their body image negatively impacted by their use of the app, whilst women in their mid-20s expressed stronger feelings of inadequacy about their lifestyle and work.

In March 2018, a further UK study suggested that tween girls who used social media reported lower levels of wellbeing in their teens than those who interacted less with one of the world’s largest social media platforms.

With so much negative press and a growing association with anxiety and depression as well as bullying and fostering negative body images amongst users, Instagram appears to be working to tackle some of these issues and concerns head on.

Former Twitter vice president of revenue and product, Ameet Ranadive, announced his new position as director of product for Instagram’s Wellbeing Team in December 2017. With its primary focus on preventing spam, abuse and harassment, Ranadive’s appointment appears to have been one of the first steps in Instagram’s move towards creating a more positive community for users.

1/ I'm excited to share the news that I have decided to join the product leadership team at Instagram!

— Ameet Ranadive (@ameet) 8 December 2017

Instagram’s head of fashion partnerships, Eva Chen, spoke to Bloomberg about the new Wellbeing Team last week.

“[The team’s] entire focus is on the wellbeing of the community...making the community a safer place, a place where people feel good, is a huge priority for Instagram.”

In recent months, Instagram have introduced a number of features to support their move towards creating a safer, more feel-good community. These have included new content moderation tools that automatically filter inappropriate comments, as well as giving users the ability to create their own comment filters.

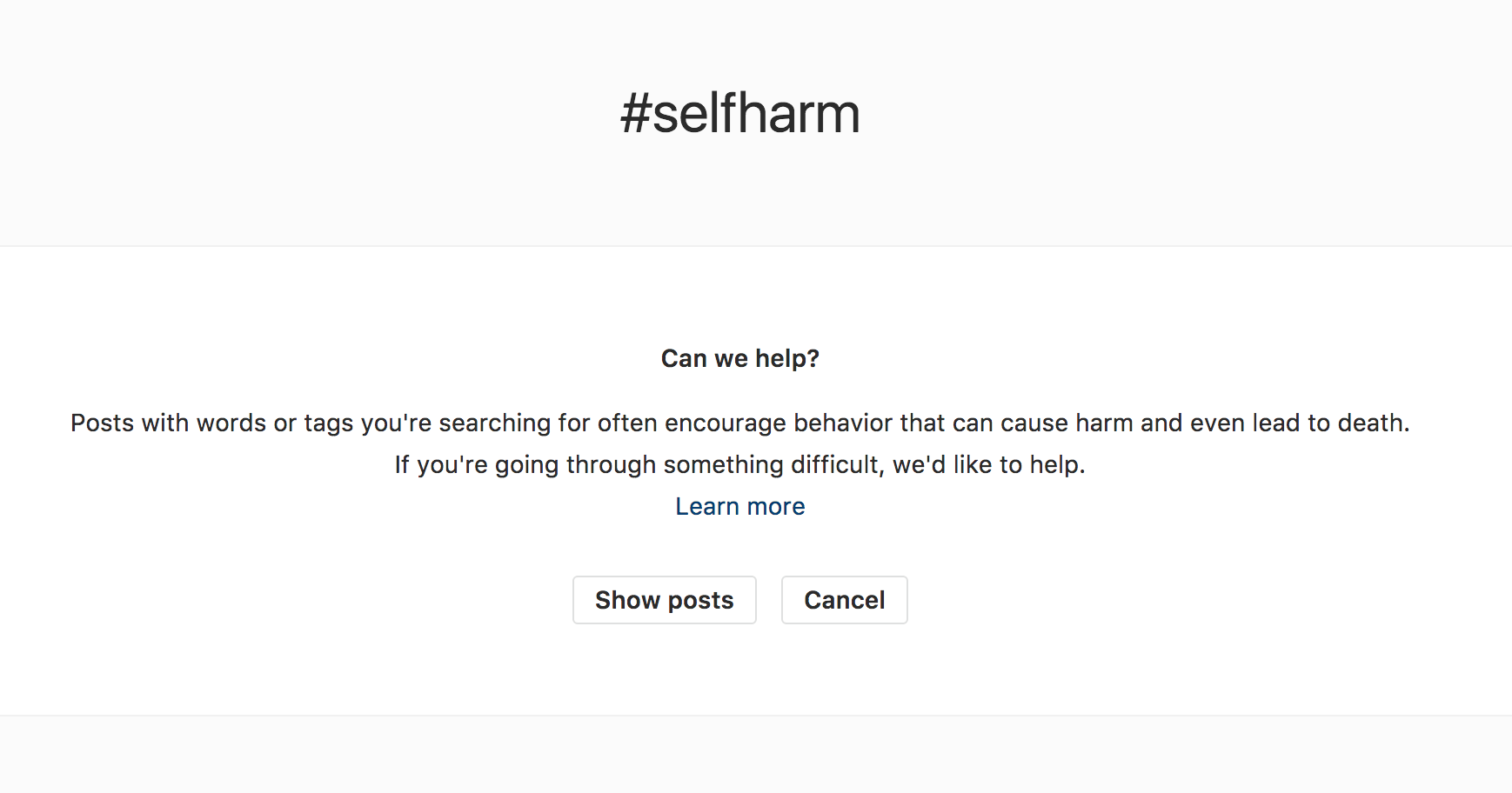

Instagram also have teams that review anonymous reports of posts where users are concerned individuals may need mental health support, putting them in contact with organisations that can offer aid. The popular social media platform also now displays a ‘Can we help?’ message when sensitive hashtags are searched for.

Despite recent positive changes, Instagram have yet to comment publicly on the impact of these new measures. The composition of the Wellbeing Team has also not been publicly announced (for example, is it primarily tech workers, or does it include or consult psychologists or researchers?).

The social media platform has also not yet acted on specific recommendations from the RSPH, such as warnings triggered when users spend excessive time on the app, or watermarks on photoshopped images that could potentially be damaging to young people’s self-esteem or mental health.

Despite the numerous studies suggesting social media may have a negative impact on mental health and wellbeing, several recent studies suggest fears around social media may be misplaced. Recent figures from YouGov on behalf of Barnardo’s suggest other pressures may have a greater negative impact on young people. Early research from the University of Vermont and Harvard University in late 2017 went as far as to suggest Instagram could be used as a beneficial diagnostic tool for users’ mental health.

For more information on mental health and wellbeing, visit Counselling Directory.
